Vision Systems

Believable Augmented Reality (AR) experiences require precision engineering and insight into how eyes and brains work together. Our people-centered approach is the foundation of our vision systems, helping devices understand the user's surroundings in real time.

Input to Immersion

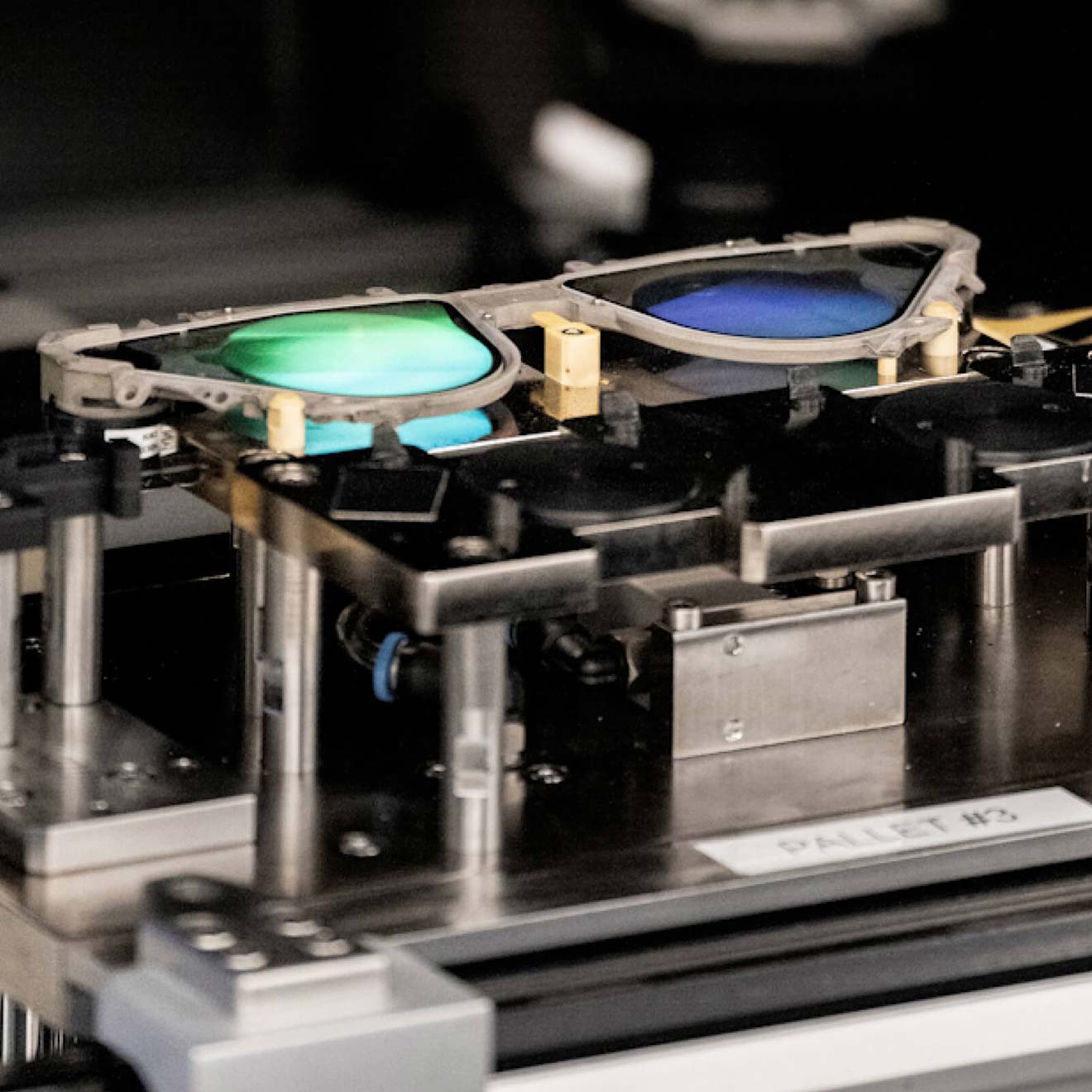

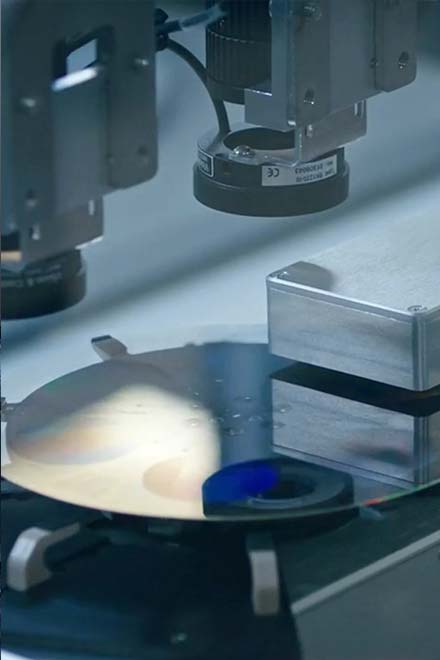

Inside our vision systems

Our perception of the world is complex, and our vision systems reflect that. We start by combining camera and sensor input with advanced computer vision and perception algorithms. Then, we deliver bright and crisp digital content using micro-displays, fine-tuned optics, and world-class waveguide displays. Together, these elements form an end-to-end pipeline that integrates digital content seamlessly with the physical world, so the user can see and interact with it naturally.

The Eye-Brain Connection

Bringing things into focus

Our perception team has developed technologies that go far beyond eye tracking. The team specializes in the connection between the human eye and brain. So while our state-of-the-art technologies can precisely track the movement of a user’s irises, they also track user attention and intention, so that content can be placed more appropriately and clearly in a space.

Projection + Displays

Photon to eyeball and back

We build our vision systems around the way light moves through the eye and how the eye reacts. Our interdisciplinary teams work hand in hand to optimize the interaction between projectors, lenses, waveguides, and the complete AR device design. Every element is tuned for brightness, clarity, and visual consistency. The result? Digital content that blends seamlessly with the physical world, delivered by a device so comfortable it becomes an indispensable part of the user’s daily experience.

Perception-Driven Engineering

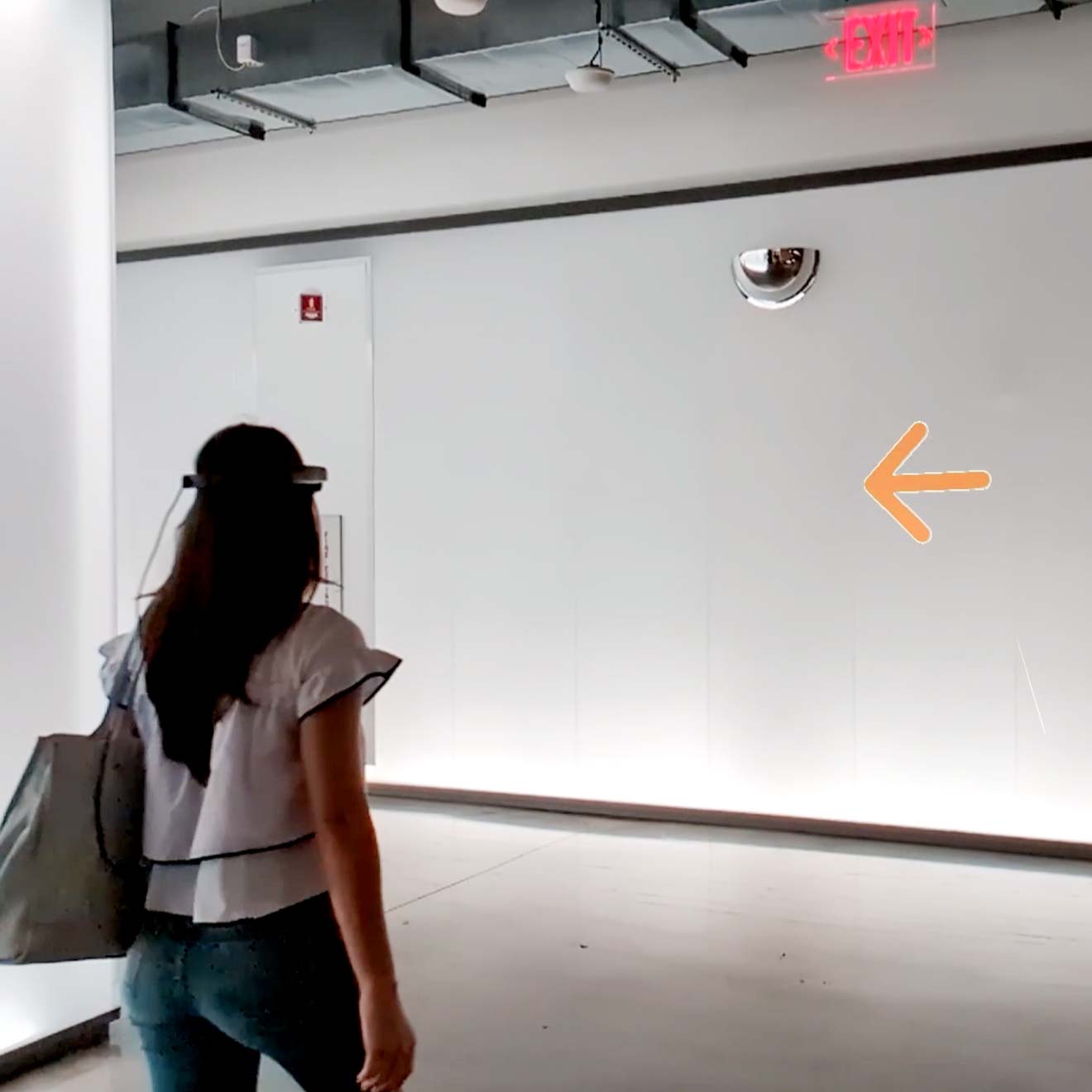

Digital content made real

It’s one thing to beam brilliant and crisp images to the eye — but for AR to truly work, those images have to feel at home in the physical world. That’s where perception engineering comes into play. Our vision systems are designed so virtual objects can behave like real ones. Whether it’s a navigation arrow anchored to the sidewalk or a reminder pinned to your door, digital content should feel natural.