News

April 12, 2023

Magic Leap collaborates with NVIDIA to advance digital twins for enterprise

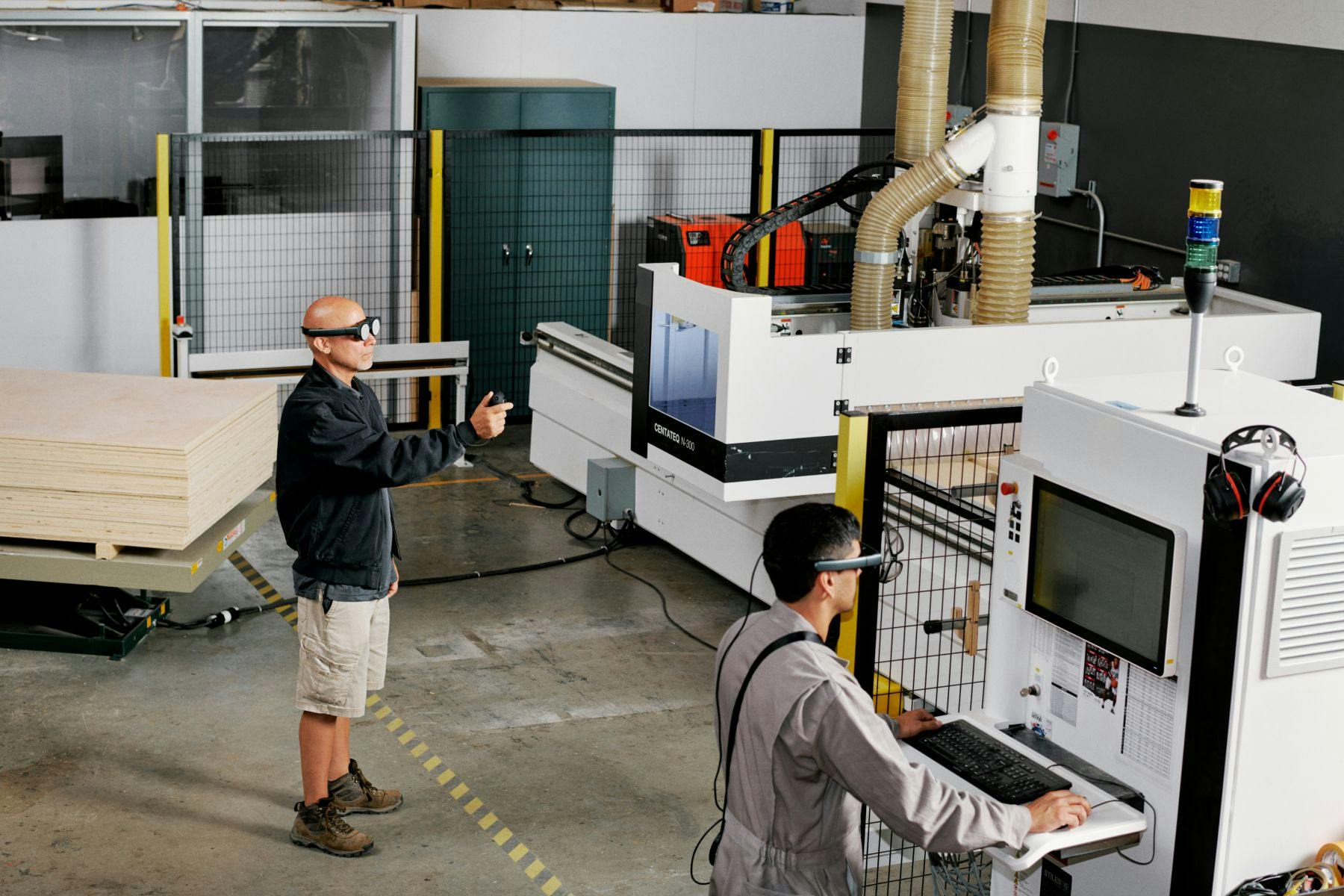

Magic Leap is pioneering immersive, photorealistic, ray-traced digital twins in NVIDIA Omniverse™ to create new efficiencies and value in engineering, architecture, retail, and other industries.

Magic Leap began its collaboration with NVIDIA to enable off-board computing and streaming of 1:1 scale 3D digital models to Magic Leap 2. The result lets enterprise users render and stream immersive, full-scale digital twins from the NVIDIA Omniverse™ platform to Magic Leap 2 without compromising visual quality. It also includes an extension that integrates common enterprise modeling programs into the UI.

Magic Leap engineering teams pioneered key technical advancements that combine the industry-leading augmented reality (AR) capabilities of Magic Leap 2 with powerful NVIDIA RTX™ GPUs to deliver a new level of visual detail for 3D models in AR. Among the advancements are:

- Ray tracing and Deep Learning Super Sampling (DLSS) on a mobile AR stereo form factor for the first time—enabling content to be rendered with highly detailed, photorealistic quality.

- Magic Leap Dynamic Dimming™ technology, an industry first that eliminates 99.7% of ambient light from the display. It makes AR usable in a greater variety of bright lighting conditions, makes digital content clearer and more vivid, and heightens detail and realism with realistic shadows.

- Accurate environmental lighting and reflections across digital content deepen immersion and afford added dimension to design review.

- Depth-based reprojection capability that compensates for latency and anchors digital content persistently within a user’s environment.

These capabilities empower enterprises and professionals who create, design, and collaborate to render physically accurate, photorealistic digital twins to Magic Leap 2 from the popular enterprise modeling tools they already use, such as Autodesk Maya.

Enterprise value: more immersive digital twins open new possibilities

Magic Leap built its Omniverse extension to make design and 3D modeling more effective and efficient. Previously, this kind of rendering would have required writing a bespoke mobile extended reality (XR) application, which drastically reduces visual quality because of computing constraints.

The collaboration not only overcomes these limitations but also prioritizes ease of use and efficiency. Doing so enables new, profitable applications across industries:

- In our demonstration, which debuted at CES, we ray traced a full-fidelity digital twin of a hypercar model. The digital twin could be modified in real time. For the automotive industry, such high-quality digital twins can streamline design and eliminate the need for costly, labor-intensive scale clay models.

- Architects and construction professionals would be able to prevent costly rework orders by accelerating design iterations and making realistic 1:1 scale design reviews possible—spotting and planning required updates before construction even begins.

- In healthcare, digital twins can transform CT scans from a series of 2D images into interactive, 3D digital assets, enabling more detailed viewing and planning.

Greater efficiency: High-fidelity rendering with one click

3D content based on Universal Scene Description (USD) from Omniverse can be instantly viewed on Magic Leap 2 without decimation, simplification, or extra work.

Magic Leap created an Omniverse extension that renders content to Magic Leap 2 from the most common enterprise modeling tools, such as Autodesk Maya, without the need for conversions or optimizations. Content is stored in Omniverse Nucleus as USD files. In Nucleus, users can live edit and collaborate on the content.

Omniverse users can seamlessly start viewing their scenes on Magic Leap 2 with one click.

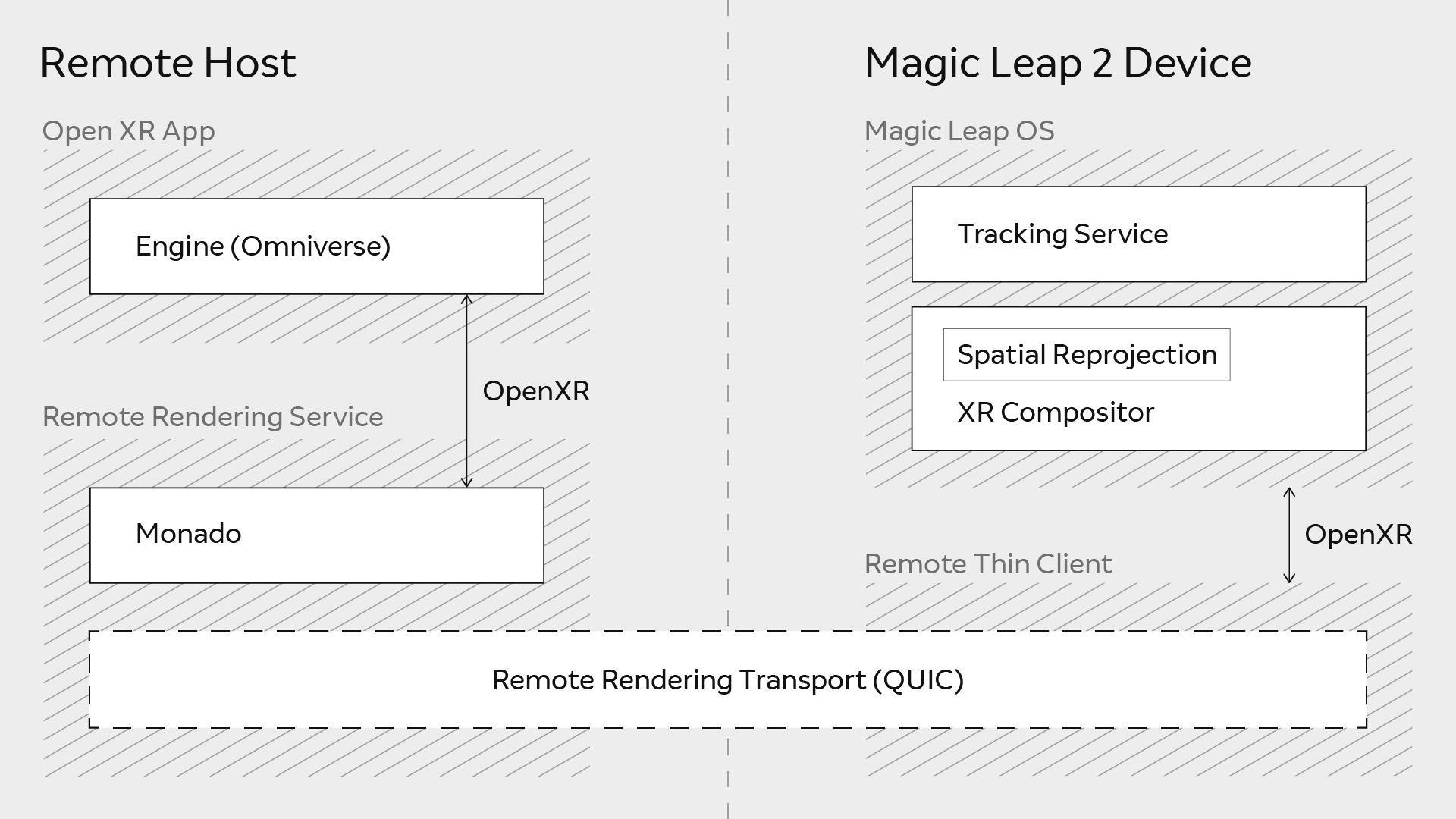

When a user clicks "View on Magic Leap", an image is rendered on the server and streamed to Magic Leap 2, where it is displayed in the correct position, orientation, and scale. Omniverse's core XR extension automatically renders the high-quality stereo images and submits them to the industry-standard OpenXR API.

The server-side OpenXR remote rendering runtime then leverages NVIDIA GPU acceleration to composite and encode the stereo images. These images are then transmitted over the network where they are received, decoded, and displayed on Magic Leap 2.

Magic Leap 2 is also constantly sending head poses, eye tracking, controller state, and other input data back to the server where it is surfaced to Omniverse through OpenXR. On the back end, other users can continue to live edit the USD content with changes instantly rendered and streamed to Magic Leap 2.
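The round trip described above (pose goes up, a frame rendered for that pose comes back) can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the names `Pose`, `Frame`, `server_render`, and `simulate` are hypothetical, rendering and encoding are treated as instantaneous, and nothing here reflects the actual OpenXR runtime or Magic Leap's transport.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Pose:
    timestamp_ms: int
    position: tuple


@dataclass
class Frame:
    rendered_for: Pose   # the pose the server used to render this frame
    payload: bytes       # stand-in for the encoded stereo image


def server_render(pose):
    """Hypothetical stand-in for render + composite + encode on the server."""
    return Frame(rendered_for=pose, payload=f"frame@{pose.timestamp_ms}".encode())


def simulate(num_ticks, tick_ms, one_way_latency_ms):
    """Return, for each displayed frame, how old its pose was at display time."""
    uplink, downlink = deque(), deque()   # queues of (arrival_time_ms, item)
    pose_age_at_display = []
    for tick in range(num_ticks):
        now = tick * tick_ms
        # Device: send the latest head pose to the server.
        uplink.append((now + one_way_latency_ms, Pose(now, (0.0, 0.0, 0.0))))
        # Server: render a frame for every pose that has arrived.
        while uplink and uplink[0][0] <= now:
            _, pose = uplink.popleft()
            downlink.append((now + one_way_latency_ms, server_render(pose)))
        # Device: decode and display every frame that has arrived.
        while downlink and downlink[0][0] <= now:
            _, frame = downlink.popleft()
            pose_age_at_display.append(now - frame.rendered_for.timestamp_ms)
    return pose_age_at_display


ages = simulate(num_ticks=10, tick_ms=10, one_way_latency_ms=20)
print(ages)
```

Even with zero render time, every displayed frame in this toy model was rendered for a pose two network hops old, which is the gap that reprojection on the device has to close.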

WATCH: Digital Twins in Augmented Reality with Omniverse, Holoscan, and Magic Leap

Ground-breaking visuals: Four tech breakthroughs that make it possible

By leveraging a number of Magic Leap 2 features and NVIDIA RTX GPUs, the solution mitigates common challenges like latency and unlocks incredible new visual details for AR models. Four of these breakthroughs are demonstrated below in screen captures from the Magic Leap auto-design demo at CES.

1. Reprojection mitigates latency and locks content in place

Latency is the biggest challenge when remote rendering and streaming files, designs, and models to a mobile AR device. By the time a stereo image has been rendered on the server, the user has likely moved from the device’s last-reported position. This causes digital content to appear to “swim,” or jump unsteadily around a user’s environment, rather than stay locked to the world, which breaks the user’s immersion.

The NVIDIA Omniverse™ and Magic Leap architecture leverages OpenXR.

The solution is the depth-based reprojection capability on Magic Leap 2. The device uses both color and depth images for each frame to create a novel view of the scene at the actual position of the user, correcting the error in pose from the position at which the frame was rendered on the server. By doing so, content stays locked in position despite underlying network latency.
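The geometry behind this kind of correction can be sketched for a single pixel: unproject it with its depth, move it into world space using the pose the server rendered with, then project it into the camera at the user's actual pose. The code below is a minimal illustration assuming a pinhole camera and hypothetical names (`reproject_pixel`, `yaw`); the article does not describe how Magic Leap 2 implements reprojection internally.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (nested lists) by a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def transpose(m):
    return [[m[c][r] for c in range(3)] for r in range(3)]


def yaw(angle_rad):
    """Rotation about the vertical (y) axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def reproject_pixel(u, v, depth, intrinsics, render_pose, display_pose):
    """Map pixel (u, v) with known depth from the render view to the display view.

    intrinsics: (fx, fy, cx, cy) of an assumed pinhole camera, z pointing forward.
    Each pose is (R, t): camera-to-world rotation and camera position in world space.
    Returns the new pixel coordinates, or None if the point falls behind the camera.
    """
    fx, fy, cx, cy = intrinsics
    # 1. Unproject the pixel into the render camera's coordinate frame.
    p_cam = [(u - cx) / fx * depth, (v - cy) / fy * depth, depth]
    # 2. Render camera -> world.
    r_render, t_render = render_pose
    p_world = [a + b for a, b in zip(mat_vec(r_render, p_cam), t_render)]
    # 3. World -> display camera (inverse of the display pose).
    r_display, t_display = display_pose
    offset = [a - b for a, b in zip(p_world, t_display)]
    p_new = mat_vec(transpose(r_display), offset)
    if p_new[2] <= 0.0:
        return None
    # 4. Project into the display view.
    return (fx * p_new[0] / p_new[2] + cx, fy * p_new[1] / p_new[2] + cy)


intrinsics = (1000.0, 1000.0, 640.0, 360.0)
render_pose = (yaw(0.0), [0.0, 0.0, 0.0])
display_pose = (yaw(0.0), [0.1, 0.0, 0.0])   # user moved 10 cm to the right
print(reproject_pixel(640.0, 360.0, 2.0, intrinsics, render_pose, display_pose))
```

In this example a point 2 m away at the image center shifts about 50 pixels to the left after a 10 cm sideways head movement. A nearer point would shift further, which is why a per-pixel depth image is needed in addition to color.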

Together with techniques like headpose prediction and the precise timing of inputs and render loops, Magic Leap 2 can tolerate more than 100 milliseconds of end-to-end latency while maintaining an immersive experience for the user.
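As a rough illustration of head pose prediction, the sketch below extrapolates head position forward by the expected latency using a constant-velocity assumption. The function name `predict_position` and the constant-velocity model are assumptions made for this example; the article does not say which predictor Magic Leap 2 uses, and a real system would also predict orientation.

```python
def predict_position(p_prev, t_prev, p_curr, t_curr, latency_s):
    """Extrapolate head position latency_s seconds ahead (constant velocity).

    p_prev, p_curr: [x, y, z] positions in meters sampled at t_prev, t_curr (seconds).
    """
    dt = t_curr - t_prev
    if dt <= 0.0:
        raise ValueError("samples must be in increasing time order")
    velocity = [(c - p) / dt for p, c in zip(p_prev, p_curr)]
    return [c + v * latency_s for c, v in zip(p_curr, velocity)]


# Head moving at 1 m/s along x, predicted 100 ms ahead.
predicted = predict_position([0.0, 0.0, 0.0], 0.0, [0.01, 0.0, 0.0], 0.01, 0.1)
print(predicted)
```

Rendering for the predicted pose rather than the last reported one shrinks the error that reprojection has to correct once the frame arrives on the device.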

2. NVIDIA RTX ray tracing and DLSS enable photorealistic quality

NVIDIA RTX ray tracing and Deep Learning Super Sampling (DLSS) technology enable photorealistic quality. These capabilities were previously infeasible because of their heavy compute requirements. Thanks to recent advancements, NVIDIA GPUs now have enough power to run such computationally intensive tasks, and through our collaboration we can harness these capabilities while rendering Omniverse content to Magic Leap 2.

Leveraging NVIDIA GPUs, we are able to display fine details like the textures of leather or metals in the interior of the model at the highest fidelity. Without ray tracing and DLSS, this would be impossible in a mobile form factor.

Photorealism: With NVIDIA Omniverse, Magic Leap users can see ray-traced photorealistic AR.

3. Dynamic Dimming™ technology makes content opaque and adds behaviorally-realistic shadows

Magic Leap 2 features the industry’s first Dynamic Dimming™ technology, a patented capability that eliminates 99.7% of ambient light from the display. Its Global Dimming™ feature eliminates light from the entirety of the display, while its Segmented Dimming™ feature eliminates only the light behind digital objects.

Using Segmented Dimming™, washout is avoided, and content is made more vivid, vibrant, and opaque. Realistic shadows are created within the user’s environment by applying Segmented Dimming™ around digital objects selectively, adding an industry-defining level of immersion to content.

Solid AR and Shadows: Magic Leap Dynamic Dimming™ technology not only enables AR objects like the car to appear solid (i.e., without significant bleed-through of natural light behind the object), but also enables AR objects to cast realistic virtual shadows on the real world.
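The effect of dimming on an optical see-through display can be approximated with simple arithmetic: what the eye sees is the ambient light that passes through the lens plus the light emitted by the display. The sketch below uses the 99.7% figure quoted above. The linear dimming model, the per-pixel mask, and the names `perceived_luminance` and `segmented_dimming_mask` are simplifying assumptions for illustration, not a description of the device's actual optics.

```python
MAX_AMBIENT_BLOCKED = 0.997  # fraction of ambient light removed at full dimming (from the article)


def perceived_luminance(ambient_nits, content_nits, dimming):
    """Ambient light passing through the dimmer plus light emitted by the display.

    dimming: 0.0 (no dimming) to 1.0 (full dimming); linear scaling is an assumption.
    """
    dimming = min(max(dimming, 0.0), 1.0)
    transmitted = ambient_nits * (1.0 - dimming * MAX_AMBIENT_BLOCKED)
    return transmitted + content_nits


def segmented_dimming_mask(alpha_row, threshold=0.0):
    """Dim only where digital content is present (alpha above the threshold)."""
    return [1.0 if a > threshold else 0.0 for a in alpha_row]


ambient, content = 1000.0, 200.0
print(perceived_luminance(ambient, content, 0.0))   # content washed out by ambient light
print(perceived_luminance(ambient, content, 1.0))   # content dominates what the eye sees
print(perceived_luminance(ambient, 0.0, 0.5))       # no content, partial dimming: a shadow
print(segmented_dimming_mask([0.0, 0.5, 1.0, 0.0]))
```

Under this model, a 200-nit object in a 1000-nit environment contributes only a sixth of the light reaching the eye without dimming, but nearly all of it with full dimming behind the object. Dimming a region where no content is drawn darkens the real world there, which is how a virtual shadow can be produced.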

4. Accurate environmental lighting and reflections ensure immersion

Historically, a lack of accurate environmental lighting and reflections has undermined the immersiveness of AR experiences. Magic Leap’s collaboration with NVIDIA tackled that problem to enable realistic lighting and reflections. At CES, we captured a 360° HDR image and used it to generate accurate lighting and reflections in the window of the rendered car. We are actively researching ways to make this functionality even more realistic through dynamic lighting estimation.

Reflections: Lighting from the real world (e.g., the white pop-up booth) is reflected in the virtual car.
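One common way to use a 360° HDR capture for reflections is to reflect the view direction about the surface normal and look up the resulting direction in the panorama. The sketch below shows that lookup for an equirectangular image. The axis convention (y up, -z forward) and the function names are assumptions for this example; the article does not describe how the demo performed the lookup, and a ray tracer such as the one in Omniverse handles this internally.

```python
import math


def reflect(direction, normal):
    """Mirror an incoming direction about a unit surface normal: r = d - 2 (d . n) n."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return [d - 2.0 * d_dot_n * n for d, n in zip(direction, normal)]


def direction_to_equirect_uv(direction):
    """Map a 3D direction to (u, v) in [0, 1] on an equirectangular panorama.

    Assumed convention: y is up, -z is the panorama center, v = 0 is straight up.
    """
    length = math.sqrt(sum(c * c for c in direction))
    x, y, z = (c / length for c in direction)
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi
    return (u, v)


# A ray looking straight down at an upward-facing surface reflects straight up.
incoming = [0.0, -1.0, 0.0]
normal = [0.0, 1.0, 0.0]
print(reflect(incoming, normal))
print(direction_to_equirect_uv([0.0, 0.0, -1.0]))
```

The returned (u, v) pair would then be scaled by the image width and height to fetch the HDR radiance that appears in the reflection.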

Continued collaboration to advance digital twins for enterprise

Magic Leap will continue our collaboration with NVIDIA and build upon our advancements in digital twin technology to deliver even greater value to enterprise users. With more immersive and accessible digital twins, teams can accelerate design, deepen collaboration, and transform design reviews and other experiences.

We are actively working with developers, customers, and partners to continuously improve digital twins and find new use cases for them. Magic Leap’s integration with Omniverse is currently in early access, with general availability expected in the first half of 2023.

If you’re interested in working with Magic Leap to deploy digital twins in your business, please contact Jiwen Cai at jcai@magicleap.com.
